mirror of

https://github.com/Infisical/infisical.git

synced 2025-04-10 07:25:40 +00:00

Compare commits

160 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

| 2bff7bbb5a | |||

| b09ae054dd | |||

| dc9c6b9d13 | |||

| f01e8cb33b | |||

| 3ba62d1d97 | |||

| 5816ce3bf7 | |||

| 2d77fe9ca3 | |||

| 6bb24933bf | |||

| fbc38c553a | |||

| 6ba4701db8 | |||

| c15a9301af | |||

| 91052df5f9 | |||

| 6ea26c135a | |||

| 5444382d5a | |||

| 200bb12ad8 | |||

| 1447e055d1 | |||

| 4dac03ab94 | |||

| 20ea50bfaf | |||

| 5474096ca9 | |||

| 06c1827f38 | |||

| 9b7f036fd0 | |||

| 547555591b | |||

| 516819507a | |||

| 02e5be20c2 | |||

| de11c50563 | |||

| 33dddd440c | |||

| 19b909cd12 | |||

| cd59ca745d | |||

| e013a4ab93 | |||

| 19daf1410a | |||

| 4c29c88fde | |||

| 6af59e47f5 | |||

| 8183e61403 | |||

| b4c616edd6 | |||

| c12eeac9b3 | |||

| 033275ed69 | |||

| a799e1bffc | |||

| 36300cd19d | |||

| c8633bf546 | |||

| 7fe2e15a98 | |||

| 72bf160f2e | |||

| 0ef9db99b4 | |||

| 805f733499 | |||

| 595dc78e75 | |||

| 6c7d232a9e | |||

| 35fd1520e2 | |||

| 3626ef2ec2 | |||

| a49fcf49f1 | |||

| 787e54fb91 | |||

| 90537f2e6d | |||

| c9448656bf | |||

| f3900213b5 | |||

| fe17d8459b | |||

| 2f54c4dd7e | |||

| c33b043f5f | |||

| 5db60c0dad | |||

| 1a3d3906da | |||

| d86c335671 | |||

| 62f0b3f6df | |||

| 3e623922b4 | |||

| d1c38513f7 | |||

| 63253d515f | |||

| 584d309b80 | |||

| 07bb3496e7 | |||

| c83c75db96 | |||

| bcd18ab0af | |||

| 1ea75eb840 | |||

| 271c810692 | |||

| dd05e2ac01 | |||

| c6c2cfaaa5 | |||

| 6f90064400 | |||

| 397c15d61e | |||

| 9e3ac6c31d | |||

| 10d57e9d88 | |||

| 95a1e9560e | |||

| 11e0790f13 | |||

| 099ddd6805 | |||

| 182db69ee1 | |||

| 01982b585f | |||

| 752ebaa3eb | |||

| 117acce3f6 | |||

| f94bf1f206 | |||

| 74d5586005 | |||

| 8896e1232b | |||

| d456dcef28 | |||

| eae2fc813a | |||

| 7cdafe0eed | |||

| d410b42a34 | |||

| bacf9f2d91 | |||

| 3fc6b0c194 | |||

| 9dc1645559 | |||

| 158c51ff3c | |||

| 864757e428 | |||

| fa53a9e41d | |||

| 36372ebef3 | |||

| b3a50d657d | |||

| c2f5f19f55 | |||

| 2b6e69ce1b | |||

| c4b4829694 | |||

| d503102f75 | |||

| 4a14753b8c | |||

| 2c834040b4 | |||

| 6f682250b6 | |||

| 52285a1f38 | |||

| 31d6191251 | |||

| d14ed06d4f | |||

| e2a84ce52e | |||

| 999f668f39 | |||

| 6b546034f4 | |||

| c2eaea21f0 | |||

| 436f408fa8 | |||

| da999107f3 | |||

| 00ea296138 | |||

| 968af64ee7 | |||

| 589e811e9b | |||

| 4f312cfd1a | |||

| 3dccfc5404 | |||

| 2b3a996114 | |||

| 93da106dbc | |||

| 7e544fcac8 | |||

| c2eac43b4f | |||

| 138acd28e8 | |||

| 1195398f15 | |||

| 1fff273abb | |||

| b586fcfd2e | |||

| 45ad639eaf | |||

| e5342bd757 | |||

| 834c32aa7e | |||

| df4dcc87e7 | |||

| d883c7ea96 | |||

| 5ed02955f8 | |||

| 064a9eb9cb | |||

| f805691c4f | |||

| 4548931df3 | |||

| 6d1dc3845b | |||

| 40b42fdcb5 | |||

| ad907fa373 | |||

| ae1088d3f6 | |||

| 9a3caac75f | |||

| 1808ab6db8 | |||

| aa554405c1 | |||

| cd70128ff8 | |||

| f49fe3962d | |||

| 9ee0c8f1b7 | |||

| a9bd878057 | |||

| a58f91f06b | |||

| 059f15b172 | |||

| caddb45394 | |||

| 8266c4dd6d | |||

| 9f82220f4e | |||

| 3d25baa319 | |||

| a8dfcae777 | |||

| 228c8a7609 | |||

| a763d8b8ed | |||

| d4e0a4992c | |||

| 1757f0d690 | |||

| b25908d91f | |||

| 68d51d402a | |||

| aa218d2ddc | |||

| 46fe724012 |

@ -40,14 +40,19 @@ SITE_URL=http://localhost:8080

|

||||

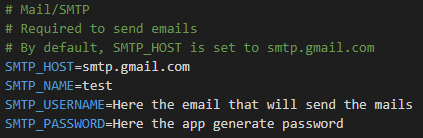

# Required to send emails

|

||||

# By default, SMTP_HOST is set to smtp.gmail.com

|

||||

SMTP_HOST=smtp.gmail.com

|

||||

SMTP_PORT=587

|

||||

SMTP_NAME=Team

|

||||

SMTP_USERNAME=team@infisical.com

|

||||

SMTP_PASSWORD=

|

||||

|

||||

# Integration

|

||||

# Optional only if integration is used

|

||||

OAUTH_CLIENT_SECRET_HEROKU=

|

||||

OAUTH_TOKEN_URL_HEROKU=

|

||||

CLIENT_ID_HEROKU=

|

||||

CLIENT_ID_VERCEL=

|

||||

CLIENT_ID_NETLIFY=

|

||||

CLIENT_SECRET_HEROKU=

|

||||

CLIENT_SECRET_VERCEL=

|

||||

CLIENT_SECRET_NETLIFY=

|

||||

|

||||

# Sentry (optional) for monitoring errors

|

||||

SENTRY_DSN=

|

||||

|

||||

3

.eslintignore

Normal file

@ -0,0 +1,3 @@

node_modules
built
healthcheck.js

30

.github/resources/docker-compose.be-test.yml

vendored

Normal file

@ -0,0 +1,30 @@

version: '3'

services:
  backend:
    container_name: infisical-backend-test
    restart: unless-stopped
    depends_on:
      - mongo
    image: infisical/backend:test
    command: npm run start
    environment:
      - NODE_ENV=production
      - MONGO_URL=mongodb://test:example@mongo:27017/?authSource=admin
      - MONGO_USERNAME=test
      - MONGO_PASSWORD=example
    networks:
      - infisical-test

  mongo:
    container_name: infisical-mongo-test
    image: mongo
    restart: always
    environment:
      - MONGO_INITDB_ROOT_USERNAME=test
      - MONGO_INITDB_ROOT_PASSWORD=example
    networks:
      - infisical-test

networks:
  infisical-test:

26

.github/resources/healthcheck.sh

vendored

Executable file

@ -0,0 +1,26 @@

# Name of the target container to check
container_name="$1"
# Timeout in seconds. Default: 60
timeout=$((${2:-60}));

if [ -z $container_name ]; then
  echo "No container name specified";
  exit 1;
fi

echo "Container: $container_name";
echo "Timeout: $timeout sec";

try=0;
is_healthy="false";
while [ $is_healthy != "true" ];
do
  try=$(($try + 1));
  printf "■";
  is_healthy=$(docker inspect --format='{{json .State.Health}}' $container_name | jq '.Status == "healthy"');
  sleep 1;
  if [[ $try -eq $timeout ]]; then
    echo " Container was not ready within timeout";
    exit 1;
  fi
done

|

||||
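Reviewer note: the polling loop in `.github/resources/healthcheck.sh` can be sketched in JavaScript as below. This is an illustration only, not code from this changeset. The names `dockerIsHealthy`, `sleepSync` and `waitUntilHealthy` are hypothetical, and the sketch assumes the `docker` CLI is on the PATH and that the image defines a `HEALTHCHECK`. Unlike the shell script, it returns as soon as a probe succeeds instead of sleeping once more.

```javascript
const { execFileSync } = require('child_process');

// Ask Docker for the container's health record; true only when Status is "healthy".
// For a container without a HEALTHCHECK, .State.Health serializes to null.
function dockerIsHealthy(containerName) {
  try {
    const out = execFileSync(
      'docker',
      ['inspect', '--format', '{{json .State.Health}}', containerName],
      { encoding: 'utf8' }
    );
    const health = JSON.parse(out);
    return health !== null && health.Status === 'healthy';
  } catch (err) {
    // Container missing, docker unavailable, or unparsable output.
    return false;
  }
}

// Block the current thread for ms milliseconds.
function sleepSync(ms) {
  Atomics.wait(new Int32Array(new SharedArrayBuffer(4)), 0, 0, ms);
}

// Call probe up to maxTries times, pausing between attempts.
// Returns true on the first successful probe, false once the tries run out.
function waitUntilHealthy(probe, maxTries = 60, sleep = () => sleepSync(1000)) {
  for (let attempt = 1; attempt <= maxTries; attempt++) {
    if (probe()) return true;
    if (attempt < maxTries) sleep();
  }
  return false;
}
```

A caller would pass the probe as a closure, for example `waitUntilHealthy(() => dockerIsHealthy('infisical-backend-test'))`, and exit non-zero when it returns `false`. Injecting `probe` and `sleep` keeps the loop testable without Docker.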

88

.github/workflows/docker-image.yml

vendored

@ -3,40 +3,38 @@ name: Push to Docker Hub

|

||||

on: [workflow_dispatch]

|

||||

|

||||

jobs:

|

||||

|

||||

backend-image:

|

||||

name: Build backend image

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

steps:

|

||||

-

|

||||

name: ☁️ Checkout source

|

||||

- name: ☁️ Checkout source

|

||||

uses: actions/checkout@v3

|

||||

-

|

||||

name: 🔧 Set up QEMU

|

||||

- name: 🔧 Set up QEMU

|

||||

uses: docker/setup-qemu-action@v2

|

||||

-

|

||||

name: 🔧 Set up Docker Buildx

|

||||

- name: 🔧 Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v2

|

||||

-

|

||||

name: 🐋 Login to Docker Hub

|

||||

- name: 🐋 Login to Docker Hub

|

||||

uses: docker/login-action@v2

|

||||

with:

|

||||

username: ${{ secrets.DOCKERHUB_USERNAME }}

|

||||

password: ${{ secrets.DOCKERHUB_TOKEN }}

|

||||

# -

|

||||

# name: 📦 Build backend and export to Docker

|

||||

# uses: docker/build-push-action@v3

|

||||

# with:

|

||||

# load: true

|

||||

# context: backend

|

||||

# tags: infisical/backend:test

|

||||

# -

|

||||

# name: 🧪 Test backend image

|

||||

# run: |

|

||||

# docker run --rm infisical/backend:test

|

||||

-

|

||||

name: 🏗️ Build backend and push

|

||||

- name: 📦 Build backend and export to Docker

|

||||

uses: docker/build-push-action@v3

|

||||

with:

|

||||

load: true

|

||||

context: backend

|

||||

tags: infisical/backend:test

|

||||

- name: ⏻ Spawn backend container and dependencies

|

||||

run: |

|

||||

docker compose -f .github/resources/docker-compose.be-test.yml up --wait --quiet-pull

|

||||

- name: 🧪 Test backend image

|

||||

run: |

|

||||

./.github/resources/healthcheck.sh infisical-backend-test

|

||||

- name: ⏻ Shut down backend container and dependencies

|

||||

run: |

|

||||

docker compose -f .github/resources/docker-compose.be-test.yml down

|

||||

- name: 🏗️ Build backend and push

|

||||

uses: docker/build-push-action@v3

|

||||

with:

|

||||

push: true

|

||||

@ -44,42 +42,40 @@ jobs:

|

||||

tags: infisical/backend:latest

|

||||

platforms: linux/amd64,linux/arm64

|

||||

|

||||

|

||||

frontend-image:

|

||||

name: Build frontend image

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

steps:

|

||||

-

|

||||

name: ☁️ Checkout source

|

||||

- name: ☁️ Checkout source

|

||||

uses: actions/checkout@v3

|

||||

-

|

||||

name: 🔧 Set up QEMU

|

||||

- name: 🔧 Set up QEMU

|

||||

uses: docker/setup-qemu-action@v2

|

||||

-

|

||||

name: 🔧 Set up Docker Buildx

|

||||

- name: 🔧 Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v2

|

||||

-

|

||||

name: 🐋 Login to Docker Hub

|

||||

- name: 🐋 Login to Docker Hub

|

||||

uses: docker/login-action@v2

|

||||

with:

|

||||

username: ${{ secrets.DOCKERHUB_USERNAME }}

|

||||

password: ${{ secrets.DOCKERHUB_TOKEN }}

|

||||

# -

|

||||

# name: 📦 Build frontend and export to Docker

|

||||

# uses: docker/build-push-action@v3

|

||||

# with:

|

||||

# load: true

|

||||

# context: frontend

|

||||

# tags: infisical/frontend:test

|

||||

# build-args: |

|

||||

# POSTHOG_API_KEY=${{ secrets.PUBLIC_POSTHOG_API_KEY }}

|

||||

# -

|

||||

# name: 🧪 Test frontend image

|

||||

# run: |

|

||||

# docker run --rm infisical/frontend:test

|

||||

-

|

||||

name: 🏗️ Build frontend and push

|

||||

- name: 📦 Build frontend and export to Docker

|

||||

uses: docker/build-push-action@v3

|

||||

with:

|

||||

load: true

|

||||

context: frontend

|

||||

tags: infisical/frontend:test

|

||||

build-args: |

|

||||

POSTHOG_API_KEY=${{ secrets.PUBLIC_POSTHOG_API_KEY }}

|

||||

- name: ⏻ Spawn frontend container

|

||||

run: |

|

||||

docker run -d --rm --name infisical-frontend-test infisical/frontend:test

|

||||

- name: 🧪 Test frontend image

|

||||

run: |

|

||||

./.github/resources/healthcheck.sh infisical-frontend-test

|

||||

- name: ⏻ Shut down frontend container

|

||||

run: |

|

||||

docker stop infisical-frontend-test

|

||||

- name: 🏗️ Build frontend and push

|

||||

uses: docker/build-push-action@v3

|

||||

with:

|

||||

push: true

|

||||

|

||||

29

.github/workflows/release_docker_k8_operator.yaml

vendored

Normal file

@ -0,0 +1,29 @@

name: Release Docker image for K8 operator
on: [workflow_dispatch]

jobs:
  release:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2

      - name: 🔧 Set up QEMU
        uses: docker/setup-qemu-action@v1

      - name: 🔧 Set up Docker Buildx
        uses: docker/setup-buildx-action@v1

      - name: 🐋 Login to Docker Hub
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Build and push
        id: docker_build
        uses: docker/build-push-action@v2
        with:
          context: k8-operator
          push: true
          platforms: linux/amd64,linux/arm64
          tags: infisical/kubernetes-operator:latest

5

.husky/pre-commit

Executable file

@ -0,0 +1,5 @@

|

||||

|

||||

#!/usr/bin/env sh

|

||||

. "$(dirname -- "$0")/_/husky.sh"

|

||||

|

||||

npx lint-staged

|

||||

62

README.md

@ -1,5 +1,5 @@

|

||||

<h1 align="center">

|

||||

<img width="300" src="/img/logoname-black.svg#gh-light-mode-only" alt="ifnisical">

|

||||

<img width="300" src="/img/logoname-black.svg#gh-light-mode-only" alt="infisical">

|

||||

<img width="300" src="/img/logoname-white.svg#gh-dark-mode-only" alt="infisical">

|

||||

</h1>

|

||||

<p align="center">

|

||||

@ -27,6 +27,9 @@

|

||||

<a href="https://join.slack.com/t/infisical-users/shared_invite/zt-1kdbk07ro-RtoyEt_9E~fyzGo_xQYP6g">

|

||||

<img src="https://img.shields.io/badge/chat-on%20Slack-blueviolet" alt="Slack community channel" />

|

||||

</a>

|

||||

<a href="https://twitter.com/infisical">

|

||||

<img src="https://img.shields.io/twitter/follow/infisical?label=Follow" alt="Infisical Twitter" />

|

||||

</a>

|

||||

</h4>

|

||||

|

||||

<img src="/img/infisical_github_repo.png" width="100%" alt="Dashboard" />

|

||||

@ -60,7 +63,7 @@ To quickly get started, visit our [get started guide](https://infisical.com/docs

|

||||

|

||||

## 🔥 What's cool about this?

|

||||

|

||||

Infisical makes secret management simple and end-to-end encrypted by default. We're on a mission to make it more accessible to all developers, <i>not just security teams</i>.

|

||||

Infisical makes secret management simple and end-to-end encrypted by default. We're on a mission to make it more accessible to all developers, <i>not just security teams</i>.

|

||||

|

||||

According to a [report](https://www.ekransystem.com/en/blog/secrets-management) in 2019, only 10% of organizations use secret management solutions despite all using digital secrets to some extent.

|

||||

|

||||

@ -73,6 +76,7 @@ We are currently working hard to make Infisical more extensive. Need any integra

|

||||

Whether it's big or small, we love contributions ❤️ Check out our guide to see how to [get started](https://infisical.com/docs/contributing/overview).

|

||||

|

||||

Not sure where to get started? You can:

|

||||

|

||||

- [Book a free, non-pressure pairing sessions with one of our teammates](mailto:tony@infisical.com?subject=Pairing%20session&body=I'd%20like%20to%20do%20a%20pairing%20session!)!

|

||||

- Join our <a href="https://join.slack.com/t/infisical-users/shared_invite/zt-1kdbk07ro-RtoyEt_9E~fyzGo_xQYP6g">Slack</a>, and ask us any questions there.

|

||||

|

||||

@ -81,7 +85,7 @@ Not sure where to get started? You can:

|

||||

- [Slack](https://join.slack.com/t/infisical-users/shared_invite/zt-1kdbk07ro-RtoyEt_9E~fyzGo_xQYP6g) (For live discussion with the community and the Infisical team)

|

||||

- [GitHub Discussions](https://github.com/Infisical/infisical/discussions) (For help with building and deeper conversations about features)

|

||||

- [GitHub Issues](https://github.com/Infisical/infisical-cli/issues) (For any bugs and errors you encounter using Infisical)

|

||||

- [Twitter](https://twitter.com/infisical) (Get news fast)

|

||||

- [Twitter](https://twitter.com/infisical) (Get news fast)

|

||||

|

||||

## 🐥 Status

|

||||

|

||||

@ -91,12 +95,6 @@ Not sure where to get started? You can:

|

||||

|

||||

We're currently in Public Alpha.

|

||||

|

||||

## 🚨 Stay Up-to-Date

|

||||

|

||||

Infisical officially launched as v.1.0 on November 21st, 2022. However, a lot of new features are coming very quickly. Watch **releases** of this repository to be notified about future updates:

|

||||

|

||||

|

||||

|

||||

## 🔌 Integrations

|

||||

|

||||

We're currently setting the foundation and building [integrations](https://infisical.com/docs/integrations/overview) so secrets can be synced everywhere. Any help is welcome! :)

|

||||

@ -130,10 +128,14 @@ We're currently setting the foundation and building [integrations](https://infis

|

||||

</tr>

|

||||

<tr>

|

||||

<td align="left" valign="middle">

|

||||

🔜 Vercel (https://github.com/Infisical/infisical/issues/60)

|

||||

<a href="https://infisical.com/docs/integrations/cloud/vercel?ref=github.com">

|

||||

✔️ Vercel

|

||||

</a>

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

🔜 GitLab CI/CD

|

||||

<a href="https://infisical.com/docs/integrations/platforms/kubernetes?ref=github.com">

|

||||

✔️ Kubernetes

|

||||

</a>

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

🔜 Fly.io

|

||||

@ -155,7 +157,7 @@ We're currently setting the foundation and building [integrations](https://infis

|

||||

🔜 GCP

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

🔜 Kubernetes

|

||||

🔜 GitLab CI/CD

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

🔜 CircleCI

|

||||

@ -179,6 +181,20 @@ We're currently setting the foundation and building [integrations](https://infis

|

||||

<td align="left" valign="middle">

|

||||

🔜 Netlify (https://github.com/Infisical/infisical/issues/55)

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

🔜 Railway

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td align="left" valign="middle">

|

||||

🔜 Bitbucket

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

🔜 Supabase

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

🔜 Serverless

|

||||

</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

@ -186,7 +202,6 @@ We're currently setting the foundation and building [integrations](https://infis

|

||||

</td>

|

||||

<td>

|

||||

|

||||

|

||||

<table>

|

||||

<tbody>

|

||||

<tr>

|

||||

@ -261,6 +276,18 @@ We're currently setting the foundation and building [integrations](https://infis

|

||||

</a>

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td align="left" valign="middle">

|

||||

<a href="https://infisical.com/docs/integrations/frameworks/fiber?ref=github.com">

|

||||

✔️ Fiber

|

||||

</a>

|

||||

</td>

|

||||

<td align="left" valign="middle">

|

||||

<a href="https://infisical.com/docs/integrations/frameworks/nuxt?ref=github.com">

|

||||

✔️ Nuxt

|

||||

</a>

|

||||

</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

@ -268,7 +295,6 @@ We're currently setting the foundation and building [integrations](https://infis

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

|

||||

## 🏘 Open-source vs. paid

|

||||

|

||||

This repo is entirely MIT licensed, with the exception of the `ee` directory which will contain premium enterprise features requiring a Infisical license in the future. We're currently focused on developing non-enterprise offerings first that should suit most use-cases.

|

||||

@ -277,6 +303,12 @@ This repo is entirely MIT licensed, with the exception of the `ee` directory whi

|

||||

|

||||

Looking to report a security vulnerability? Please don't post about it in GitHub issue. Instead, refer to our [SECURITY.md](./SECURITY.md) file.

|

||||

|

||||

## 🚨 Stay Up-to-Date

|

||||

|

||||

Infisical officially launched as v.1.0 on November 21st, 2022. However, a lot of new features are coming very quickly. Watch **releases** of this repository to be notified about future updates:

|

||||

|

||||

|

||||

|

||||

## 🦸 Contributors

|

||||

|

||||

[//]: contributor-faces

|

||||

@ -285,4 +317,4 @@ Looking to report a security vulnerability? Please don't post about it in GitHub

|

||||

<!-- prettier-ignore-start -->

|

||||

<!-- markdownlint-disable -->

|

||||

|

||||

<a href="https://github.com/dangtony98"><img src="https://avatars.githubusercontent.com/u/25857006?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/mv-turtle"><img src="https://avatars.githubusercontent.com/u/78047717?s=96&v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/maidul98"><img src="https://avatars.githubusercontent.com/u/9300960?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/gangjun06"><img src="https://avatars.githubusercontent.com/u/50910815?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/reginaldbondoc"><img src="https://avatars.githubusercontent.com/u/7693108?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/SH5H"><img src="https://avatars.githubusercontent.com/u/25437192?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/asharonbaltazar"><img src="https://avatars.githubusercontent.com/u/58940073?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/edgarrmondragon"><img src="https://avatars.githubusercontent.com/u/16805946?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/hanywang2"><img src="https://avatars.githubusercontent.com/u/44352119?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/tobias-mintlify"><img src="https://avatars.githubusercontent.com/u/110702161?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/0xflotus"><img src="https://avatars.githubusercontent.com/u/26602940?v=4" width="50" height="50" alt=""/></a>

|

||||

<a href="https://github.com/dangtony98"><img src="https://avatars.githubusercontent.com/u/25857006?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/mv-turtle"><img src="https://avatars.githubusercontent.com/u/78047717?s=96&v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/maidul98"><img src="https://avatars.githubusercontent.com/u/9300960?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/gangjun06"><img src="https://avatars.githubusercontent.com/u/50910815?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/reginaldbondoc"><img src="https://avatars.githubusercontent.com/u/7693108?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/SH5H"><img src="https://avatars.githubusercontent.com/u/25437192?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/asharonbaltazar"><img src="https://avatars.githubusercontent.com/u/58940073?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/edgarrmondragon"><img src="https://avatars.githubusercontent.com/u/16805946?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/arjunyel"><img src="https://avatars.githubusercontent.com/u/11153289?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/LemmyMwaura"><img src="https://avatars.githubusercontent.com/u/20738858?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/adrianmarinwork"><img src="https://avatars.githubusercontent.com/u/118568289?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/hanywang2"><img src="https://avatars.githubusercontent.com/u/44352119?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/tobias-mintlify"><img src="https://avatars.githubusercontent.com/u/110702161?v=4" width="50" height="50" alt=""/></a> <a href="https://github.com/0xflotus"><img src="https://avatars.githubusercontent.com/u/26602940?v=4" width="50" height="50" alt=""/></a> <a 
href="https://github.com/wanjohiryan"><img src="https://avatars.githubusercontent.com/u/71614375?v=4" width="50" height="50" alt=""/></a>

|

||||

|

||||

@ -1,18 +1,12 @@

|

||||

{

|

||||

"root": true,

|

||||

"parser": "@typescript-eslint/parser",

|

||||

"plugins": [

|

||||

"@typescript-eslint",

|

||||

"prettier"

|

||||

],

|

||||

"extends": [

|

||||

"eslint:recommended",

|

||||

"plugin:@typescript-eslint/eslint-recommended",

|

||||

"plugin:@typescript-eslint/recommended",

|

||||

"prettier"

|

||||

],

|

||||

"rules": {

|

||||

"no-console": 2,

|

||||

"prettier/prettier": 2

|

||||

}

|

||||

}

|

||||

"parser": "@typescript-eslint/parser",

|

||||

"plugins": ["@typescript-eslint"],

|

||||

"extends": [

|

||||

"eslint:recommended",

|

||||

"plugin:@typescript-eslint/eslint-recommended",

|

||||

"plugin:@typescript-eslint/recommended"

|

||||

],

|

||||

"rules": {

|

||||

"no-console": 2

|

||||

}

|

||||

}

|

||||

|

||||

@ -1,7 +0,0 @@

|

||||

{

|

||||

"semi": true,

|

||||

"trailingComma": "none",

|

||||

"singleQuote": true,

|

||||

"printWidth": 80,

|

||||

"useTabs": true

|

||||

}

|

||||

@ -2,11 +2,14 @@ FROM node:16-bullseye-slim

|

||||

|

||||

WORKDIR /app

|

||||

|

||||

COPY package*.json .

|

||||

COPY package.json package-lock.json ./

|

||||

|

||||

RUN npm install

|

||||

RUN npm ci --only-production --ignore-scripts

|

||||

|

||||

COPY . .

|

||||

|

||||

CMD ["npm", "run", "start"]

|

||||

HEALTHCHECK --interval=10s --timeout=3s --start-period=10s \

|

||||

CMD node healthcheck.js

|

||||

|

||||

|

||||

CMD ["npm", "run", "start"]

|

||||

|

||||

8

backend/environment.d.ts

vendored

@ -14,8 +14,12 @@ declare global {

|

||||

JWT_SIGNUP_SECRET: string;

|

||||

MONGO_URL: string;

|

||||

NODE_ENV: 'development' | 'staging' | 'testing' | 'production';

|

||||

OAUTH_CLIENT_SECRET_HEROKU: string;

|

||||

OAUTH_TOKEN_URL_HEROKU: string;

|

||||

CLIENT_ID_HEROKU: string;

|

||||

CLIENT_ID_VERCEL: string;

|

||||

CLIENT_ID_NETLIFY: string;

|

||||

CLIENT_SECRET_HEROKU: string;

|

||||

CLIENT_SECRET_VERCEL: string;

|

||||

CLIENT_SECRET_NETLIFY: string;

|

||||

POSTHOG_HOST: string;

|

||||

POSTHOG_PROJECT_API_KEY: string;

|

||||

PRIVATE_KEY: string;

|

||||

|

||||

24

backend/healthcheck.js

Normal file

@ -0,0 +1,24 @@

const http = require('http');
const PORT = process.env.PORT || 4000;
const options = {
  host: 'localhost',
  port: PORT,
  timeout: 2000,
  path: '/healthcheck'
};

const healthCheck = http.request(options, (res) => {
  console.log(`HEALTHCHECK STATUS: ${res.statusCode}`);
  if (res.statusCode == 200) {
    process.exit(0);
  } else {
    process.exit(1);
  }
});

healthCheck.on('error', function (err) {
  console.error(`HEALTH CHECK ERROR: ${err}`);
  process.exit(1);
});

healthCheck.end();

|

||||
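Reviewer note: in Node's `http` module the `timeout` option only causes a `'timeout'` event to be emitted when the socket goes idle; the request is not aborted unless the handler destroys it. A minimal sketch of a probe that handles this is shown below. It is not part of this changeset; `exitCodeFor` and `probe` are hypothetical names, and the defaults simply mirror the values in `backend/healthcheck.js`.

```javascript
const http = require('http');

// Map an HTTP status code to a process exit code (0 means healthy).
function exitCodeFor(statusCode) {
  return statusCode === 200 ? 0 : 1;
}

// Resolve with an exit code for a GET request to http://host:port/path.
function probe({ host = 'localhost', port = 4000, path = '/healthcheck', timeout = 2000 } = {}) {
  return new Promise((resolve) => {
    const req = http.request({ host, port, path, timeout }, (res) => {
      res.resume(); // discard the body so the socket can be released
      resolve(exitCodeFor(res.statusCode));
    });
    // 'timeout' only reports an idle socket; destroy the request explicitly,
    // which surfaces as an 'error' event handled below.
    req.on('timeout', () => req.destroy(new Error('health check timed out')));
    req.on('error', () => resolve(1));
    req.end();
  });
}
```

A script could then finish with `probe().then((code) => process.exit(code));`. With a Docker `HEALTHCHECK --timeout` already in place the container runtime kills a hung check anyway, so this is a defensive option rather than a required change.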

384

backend/package-lock.json

generated

@ -9,6 +9,7 @@

|

||||

"version": "1.0.0",

|

||||

"license": "ISC",

|

||||

"dependencies": {

|

||||

"@godaddy/terminus": "^4.11.2",

|

||||

"@sentry/node": "^7.14.0",

|

||||

"@sentry/tracing": "^7.19.0",

|

||||

"@types/crypto-js": "^4.1.1",

|

||||

@ -28,7 +29,7 @@

|

||||

"mongoose": "^6.7.2",

|

||||

"nodemailer": "^6.8.0",

|

||||

"posthog-node": "^2.1.0",

|

||||

"query-string": "^7.1.1",

|

||||

"query-string": "^7.1.3",

|

||||

"rimraf": "^3.0.2",

|

||||

"stripe": "^10.7.0",

|

||||

"tweetnacl": "^1.0.3",

|

||||

@ -48,14 +49,10 @@

|

||||

"@typescript-eslint/eslint-plugin": "^5.40.1",

|

||||

"@typescript-eslint/parser": "^5.40.1",

|

||||

"eslint": "^8.26.0",

|

||||

"eslint-config-prettier": "^8.5.0",

|

||||

"eslint-plugin-prettier": "^4.2.1",

|

||||

"husky": "^8.0.1",

|

||||

"install": "^0.13.0",

|

||||

"jest": "^29.3.1",

|

||||

"nodemon": "^2.0.19",

|

||||

"npm": "^8.19.3",

|

||||

"prettier": "^2.7.1",

|

||||

"ts-node": "^10.9.1"

|

||||

}

|

||||

},

|

||||

@ -2029,6 +2026,14 @@

|

||||

"url": "https://opencollective.com/eslint"

|

||||

}

|

||||

},

|

||||

"node_modules/@godaddy/terminus": {

|

||||

"version": "4.11.2",

|

||||

"resolved": "https://registry.npmjs.org/@godaddy/terminus/-/terminus-4.11.2.tgz",

|

||||

"integrity": "sha512-e/kbOWpGKME42eltM/wXM3RxSUOrfureZxEd6Dt6NXyFoJ7E8lnmm7znXydJsL3B7ky4HRFZI+eHrep54NZbeQ==",

|

||||

"dependencies": {

|

||||

"stoppable": "^1.1.0"

|

||||

}

|

||||

},

|

||||

"node_modules/@humanwhocodes/config-array": {

|

||||

"version": "0.11.7",

|

||||

"resolved": "https://registry.npmjs.org/@humanwhocodes/config-array/-/config-array-0.11.7.tgz",

|

||||

@ -2594,19 +2599,6 @@

|

||||

"@maxmind/geoip2-node": "^3.4.0"

|

||||

}

|

||||

},

|

||||

"node_modules/@sentry/core": {

|

||||

"version": "7.17.4",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/core/-/core-7.17.4.tgz",

|

||||

"integrity": "sha512-U3ABSJBKGK8dJ01nEG2+qNOb6Wv7U3VqoajiZxfV4lpPWNFGCoEhiTytxBlFTOCmdUH8209zSZiWJZaDLy+TSA==",

|

||||

"dependencies": {

|

||||

"@sentry/types": "7.17.4",

|

||||

"@sentry/utils": "7.17.4",

|

||||

"tslib": "^1.9.3"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=8"

|

||||

}

|

||||

},

|

||||

"node_modules/@sentry/node": {

|

||||

"version": "7.19.0",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/node/-/node-7.19.0.tgz",

|

||||

@ -2704,26 +2696,6 @@

|

||||

"node": ">=8"

|

||||

}

|

||||

},

|

||||

"node_modules/@sentry/types": {

|

||||

"version": "7.17.4",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/types/-/types-7.17.4.tgz",

|

||||

"integrity": "sha512-QJj8vO4AtxuzQfJIzDnECSmoxwnS+WJsm1Ta2Cwdy+TUCBJyWpW7aIJJGta76zb9gNPGb3UcAbeEjhMJBJeRMQ==",

|

||||

"engines": {

|

||||

"node": ">=8"

|

||||

}

|

||||

},

|

||||

"node_modules/@sentry/utils": {

|

||||

"version": "7.17.4",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/utils/-/utils-7.17.4.tgz",

|

||||

"integrity": "sha512-ioG0ANy8uiWzig82/e7cc+6C9UOxkyBzJDi1luoQVDH6P0/PvM8GzVU+1iUVUipf8+OL1Jh09GrWnd5wLm3XNQ==",

|

||||

"dependencies": {

|

||||

"@sentry/types": "7.17.4",

|

||||

"tslib": "^1.9.3"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=8"

|

||||

}

|

||||

},

|

||||

"node_modules/@sinclair/typebox": {

|

||||

"version": "0.24.51",

|

||||

"resolved": "https://registry.npmjs.org/@sinclair/typebox/-/typebox-0.24.51.tgz",

|

||||

@ -2943,12 +2915,6 @@

|

||||

"@types/node": "*"

|

||||

}

|

||||

},

|

||||

"node_modules/@types/prettier": {

|

||||

"version": "2.7.1",

|

||||

"resolved": "https://registry.npmjs.org/@types/prettier/-/prettier-2.7.1.tgz",

|

||||

"integrity": "sha512-ri0UmynRRvZiiUJdiz38MmIblKK+oH30MztdBVR95dv/Ubw6neWSb8u1XpRb72L4qsZOhz+L+z9JD40SJmfWow==",

|

||||

"dev": true

|

||||

},

|

||||

"node_modules/@types/qs": {

|

||||

"version": "6.9.7",

|

||||

"resolved": "https://registry.npmjs.org/@types/qs/-/qs-6.9.7.tgz",

|

||||

@ -4035,9 +4001,9 @@

|

||||

}

|

||||

},

|

||||

"node_modules/decode-uri-component": {

|

||||

"version": "0.2.0",

|

||||

"resolved": "https://registry.npmjs.org/decode-uri-component/-/decode-uri-component-0.2.0.tgz",

|

||||

"integrity": "sha512-hjf+xovcEn31w/EUYdTXQh/8smFL/dzYjohQGEIgjyNavaJfBY2p5F527Bo1VPATxv0VYTUC2bOcXvqFwk78Og==",

|

||||

"version": "0.2.2",

|

||||

"resolved": "https://registry.npmjs.org/decode-uri-component/-/decode-uri-component-0.2.2.tgz",

|

||||

"integrity": "sha512-FqUYQ+8o158GyGTrMFJms9qh3CqTKvAqgqsTnkLI8sKu0028orqBhxNMFkFen0zGyg6epACD32pjVk58ngIErQ==",

|

||||

"engines": {

|

||||

"node": ">=0.10"

|

||||

}

|

||||

@ -4291,39 +4257,6 @@

|

||||

"url": "https://opencollective.com/eslint"

|

||||

}

|

||||

},

|

||||

"node_modules/eslint-config-prettier": {

|

||||

"version": "8.5.0",

|

||||

"resolved": "https://registry.npmjs.org/eslint-config-prettier/-/eslint-config-prettier-8.5.0.tgz",

|

||||

"integrity": "sha512-obmWKLUNCnhtQRKc+tmnYuQl0pFU1ibYJQ5BGhTVB08bHe9wC8qUeG7c08dj9XX+AuPj1YSGSQIHl1pnDHZR0Q==",

|

||||

"dev": true,

|

||||

"bin": {

|

||||

"eslint-config-prettier": "bin/cli.js"

|

||||

},

|

||||

"peerDependencies": {

|

||||

"eslint": ">=7.0.0"

|

||||

}

|

||||

},

|

||||

"node_modules/eslint-plugin-prettier": {

|

||||

"version": "4.2.1",

|

||||

"resolved": "https://registry.npmjs.org/eslint-plugin-prettier/-/eslint-plugin-prettier-4.2.1.tgz",

|

||||

"integrity": "sha512-f/0rXLXUt0oFYs8ra4w49wYZBG5GKZpAYsJSm6rnYL5uVDjd+zowwMwVZHnAjf4edNrKpCDYfXDgmRE/Ak7QyQ==",

|

||||

"dev": true,

|

||||

"dependencies": {

|

||||

"prettier-linter-helpers": "^1.0.0"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=12.0.0"

|

||||

},

|

||||

"peerDependencies": {

|

||||

"eslint": ">=7.28.0",

|

||||

"prettier": ">=2.0.0"

|

||||

},

|

||||

"peerDependenciesMeta": {

|

||||

"eslint-config-prettier": {

|

||||

"optional": true

|

||||

}

|

||||

}

|

||||

},

|

||||

"node_modules/eslint-scope": {

|

||||

"version": "5.1.1",

|

||||

"resolved": "https://registry.npmjs.org/eslint-scope/-/eslint-scope-5.1.1.tgz",

|

||||

@@ -4641,12 +4574,6 @@

|

||||

"integrity": "sha512-f3qQ9oQy9j2AhBe/H9VC91wLmKBCCU/gDOnKNAYG5hswO7BLKj09Hc5HYNz9cGI++xlpDCIgDaitVs03ATR84Q==",

|

||||

"dev": true

|

||||

},

|

||||

"node_modules/fast-diff": {

|

||||

"version": "1.2.0",

|

||||

"resolved": "https://registry.npmjs.org/fast-diff/-/fast-diff-1.2.0.tgz",

|

||||

"integrity": "sha512-xJuoT5+L99XlZ8twedaRf6Ax2TgQVxvgZOYoPKqZufmJib0tL2tegPBOZb1pVNgIhlqDlA0eO0c3wBvQcmzx4w==",

|

||||

"dev": true

|

||||

},

|

||||

"node_modules/fast-glob": {

|

||||

"version": "3.2.12",

|

||||

"resolved": "https://registry.npmjs.org/fast-glob/-/fast-glob-3.2.12.tgz",

|

||||

@@ -5146,21 +5073,6 @@

|

||||

"node": ">=10.17.0"

|

||||

}

|

||||

},

|

||||

"node_modules/husky": {

|

||||

"version": "8.0.1",

|

||||

"resolved": "https://registry.npmjs.org/husky/-/husky-8.0.1.tgz",

|

||||

"integrity": "sha512-xs7/chUH/CKdOCs7Zy0Aev9e/dKOMZf3K1Az1nar3tzlv0jfqnYtu235bstsWTmXOR0EfINrPa97yy4Lz6RiKw==",

|

||||

"dev": true,

|

||||

"bin": {

|

||||

"husky": "lib/bin.js"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=14"

|

||||

},

|

||||

"funding": {

|

||||

"url": "https://github.com/sponsors/typicode"

|

||||

}

|

||||

},

|

||||

"node_modules/iconv-lite": {

|

||||

"version": "0.4.24",

|

||||

"resolved": "https://registry.npmjs.org/iconv-lite/-/iconv-lite-0.4.24.tgz",

|

||||

@@ -5920,7 +5832,6 @@

|

||||

"@jest/transform": "^29.3.1",

|

||||

"@jest/types": "^29.3.1",

|

||||

"@types/babel__traverse": "^7.0.6",

|

||||

"@types/prettier": "^2.1.5",

|

||||

"babel-preset-current-node-syntax": "^1.0.0",

|

||||

"chalk": "^4.0.0",

|

||||

"expect": "^29.3.1",

|

||||

@@ -6780,7 +6691,129 @@

|

||||

"treeverse",

|

||||

"validate-npm-package-name",

|

||||

"which",

|

||||

"write-file-atomic"

|

||||

"write-file-atomic",

|

||||

"@colors/colors",

|

||||

"@gar/promisify",

|

||||

"@npmcli/disparity-colors",

|

||||

"@npmcli/git",

|

||||

"@npmcli/installed-package-contents",

|

||||

"@npmcli/metavuln-calculator",

|

||||

"@npmcli/move-file",

|

||||

"@npmcli/name-from-folder",

|

||||

"@npmcli/node-gyp",

|

||||

"@npmcli/promise-spawn",

|

||||

"@npmcli/query",

|

||||

"@tootallnate/once",

|

||||

"agent-base",

|

||||

"agentkeepalive",

|

||||

"aggregate-error",

|

||||

"ansi-regex",

|

||||

"ansi-styles",

|

||||

"aproba",

|

||||

"are-we-there-yet",

|

||||

"asap",

|

||||

"balanced-match",

|

||||

"bin-links",

|

||||

"binary-extensions",

|

||||

"brace-expansion",

|

||||

"builtins",

|

||||

"cidr-regex",

|

||||

"clean-stack",

|

||||

"clone",

|

||||

"cmd-shim",

|

||||

"color-convert",

|

||||

"color-name",

|

||||

"color-support",

|

||||

"common-ancestor-path",

|

||||

"concat-map",

|

||||

"console-control-strings",

|

||||

"cssesc",

|

||||

"debug",

|

||||

"debuglog",

|

||||

"defaults",

|

||||

"delegates",

|

||||

"depd",

|

||||

"dezalgo",

|

||||

"diff",

|

||||

"emoji-regex",

|

||||

"encoding",

|

||||

"env-paths",

|

||||

"err-code",

|

||||

"fs.realpath",

|

||||

"function-bind",

|

||||

"gauge",

|

||||

"has",

|

||||

"has-flag",

|

||||

"has-unicode",

|

||||

"http-cache-semantics",

|

||||

"http-proxy-agent",

|

||||

"https-proxy-agent",

|

||||

"humanize-ms",

|

||||

"iconv-lite",

|

||||

"ignore-walk",

|

||||

"imurmurhash",

|

||||

"indent-string",

|

||||

"infer-owner",

|

||||

"inflight",

|

||||

"inherits",

|

||||

"ip",

|

||||

"ip-regex",

|

||||

"is-core-module",

|

||||

"is-fullwidth-code-point",

|

||||

"is-lambda",

|

||||

"isexe",

|

||||

"json-stringify-nice",

|

||||

"jsonparse",

|

||||

"just-diff",

|

||||

"just-diff-apply",

|

||||

"lru-cache",

|

||||

"minipass-collect",

|

||||

"minipass-fetch",

|

||||

"minipass-flush",

|

||||

"minipass-json-stream",

|

||||

"minipass-sized",

|

||||

"minizlib",

|

||||

"mute-stream",

|

||||

"negotiator",

|

||||

"normalize-package-data",

|

||||

"npm-bundled",

|

||||

"npm-normalize-package-bin",

|

||||

"npm-packlist",

|

||||

"once",

|

||||

"path-is-absolute",

|

||||

"postcss-selector-parser",

|

||||

"promise-all-reject-late",

|

||||

"promise-call-limit",

|

||||

"promise-inflight",

|

||||

"promise-retry",

|

||||

"promzard",

|

||||

"read-cmd-shim",

|

||||

"readable-stream",

|

||||

"retry",

|

||||

"safe-buffer",

|

||||

"safer-buffer",

|

||||

"set-blocking",

|

||||

"signal-exit",

|

||||

"smart-buffer",

|

||||

"socks",

|

||||

"socks-proxy-agent",

|

||||

"spdx-correct",

|

||||

"spdx-exceptions",

|

||||

"spdx-expression-parse",

|

||||

"spdx-license-ids",

|

||||

"string_decoder",

|

||||

"string-width",

|

||||

"strip-ansi",

|

||||

"supports-color",

|

||||

"unique-filename",

|

||||

"unique-slug",

|

||||

"util-deprecate",

|

||||

"validate-npm-package-license",

|

||||

"walk-up-path",

|

||||

"wcwidth",

|

||||

"wide-align",

|

||||

"wrappy",

|

||||

"yallist"

|

||||

],

|

||||

"dev": true,

|

||||

"dependencies": {

|

||||

@@ -9591,33 +9624,6 @@

|

||||

"node": ">= 0.8.0"

|

||||

}

|

||||

},

|

||||

"node_modules/prettier": {

|

||||

"version": "2.7.1",

|

||||

"resolved": "https://registry.npmjs.org/prettier/-/prettier-2.7.1.tgz",

|

||||

"integrity": "sha512-ujppO+MkdPqoVINuDFDRLClm7D78qbDt0/NR+wp5FqEZOoTNAjPHWj17QRhu7geIHJfcNhRk1XVQmF8Bp3ye+g==",

|

||||

"dev": true,

|

||||

"bin": {

|

||||

"prettier": "bin-prettier.js"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=10.13.0"

|

||||

},

|

||||

"funding": {

|

||||

"url": "https://github.com/prettier/prettier?sponsor=1"

|

||||

}

|

||||

},

|

||||

"node_modules/prettier-linter-helpers": {

|

||||

"version": "1.0.0",

|

||||

"resolved": "https://registry.npmjs.org/prettier-linter-helpers/-/prettier-linter-helpers-1.0.0.tgz",

|

||||

"integrity": "sha512-GbK2cP9nraSSUF9N2XwUwqfzlAFlMNYYl+ShE/V+H8a9uNl/oUqB1w2EL54Jh0OlyRSd8RfWYJ3coVS4TROP2w==",

|

||||

"dev": true,

|

||||

"dependencies": {

|

||||

"fast-diff": "^1.1.2"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=6.0.0"

|

||||

}

|

||||

},

|

||||

"node_modules/pretty-format": {

|

||||

"version": "29.3.1",

|

||||

"resolved": "https://registry.npmjs.org/pretty-format/-/pretty-format-29.3.1.tgz",

|

||||

@@ -9703,11 +9709,11 @@

|

||||

}

|

||||

},

|

||||

"node_modules/query-string": {

|

||||

"version": "7.1.1",

|

||||

"resolved": "https://registry.npmjs.org/query-string/-/query-string-7.1.1.tgz",

|

||||

"integrity": "sha512-MplouLRDHBZSG9z7fpuAAcI7aAYjDLhtsiVZsevsfaHWDS2IDdORKbSd1kWUA+V4zyva/HZoSfpwnYMMQDhb0w==",

|

||||

"version": "7.1.3",

|

||||

"resolved": "https://registry.npmjs.org/query-string/-/query-string-7.1.3.tgz",

|

||||

"integrity": "sha512-hh2WYhq4fi8+b+/2Kg9CEge4fDPvHS534aOOvOZeQ3+Vf2mCFsaFBYj0i+iXcAq6I9Vzp5fjMFBlONvayDC1qg==",

|

||||

"dependencies": {

|

||||

"decode-uri-component": "^0.2.0",

|

||||

"decode-uri-component": "^0.2.2",

|

||||

"filter-obj": "^1.1.0",

|

||||

"split-on-first": "^1.0.0",

|

||||

"strict-uri-encode": "^2.0.0"

|

||||

@@ -10241,6 +10247,15 @@

|

||||

"node": ">= 0.8"

|

||||

}

|

||||

},

|

||||

"node_modules/stoppable": {

|

||||

"version": "1.1.0",

|

||||

"resolved": "https://registry.npmjs.org/stoppable/-/stoppable-1.1.0.tgz",

|

||||

"integrity": "sha512-KXDYZ9dszj6bzvnEMRYvxgeTHU74QBFL54XKtP3nyMuJ81CFYtABZ3bAzL2EdFUaEwJOBOgENyFj3R7oTzDyyw==",

|

||||

"engines": {

|

||||

"node": ">=4",

|

||||

"npm": ">=6"

|

||||

}

|

||||

},

|

||||

"node_modules/strict-uri-encode": {

|

||||

"version": "2.0.0",

|

||||

"resolved": "https://registry.npmjs.org/strict-uri-encode/-/strict-uri-encode-2.0.0.tgz",

|

||||

@@ -12656,6 +12671,14 @@

|

||||

"strip-json-comments": "^3.1.1"

|

||||

}

|

||||

},

|

||||

"@godaddy/terminus": {

|

||||

"version": "4.11.2",

|

||||

"resolved": "https://registry.npmjs.org/@godaddy/terminus/-/terminus-4.11.2.tgz",

|

||||

"integrity": "sha512-e/kbOWpGKME42eltM/wXM3RxSUOrfureZxEd6Dt6NXyFoJ7E8lnmm7znXydJsL3B7ky4HRFZI+eHrep54NZbeQ==",

|

||||

"requires": {

|

||||

"stoppable": "^1.1.0"

|

||||

}

|

||||

},

|

||||

"@humanwhocodes/config-array": {

|

||||

"version": "0.11.7",

|

||||

"resolved": "https://registry.npmjs.org/@humanwhocodes/config-array/-/config-array-0.11.7.tgz",

|

||||

@@ -13113,16 +13136,6 @@

|

||||

"@maxmind/geoip2-node": "^3.4.0"

|

||||

}

|

||||

},

|

||||

"@sentry/core": {

|

||||

"version": "7.17.4",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/core/-/core-7.17.4.tgz",

|

||||

"integrity": "sha512-U3ABSJBKGK8dJ01nEG2+qNOb6Wv7U3VqoajiZxfV4lpPWNFGCoEhiTytxBlFTOCmdUH8209zSZiWJZaDLy+TSA==",

|

||||

"requires": {

|

||||

"@sentry/types": "7.17.4",

|

||||

"@sentry/utils": "7.17.4",

|

||||

"tslib": "^1.9.3"

|

||||

}

|

||||

},

|

||||

"@sentry/node": {

|

||||

"version": "7.19.0",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/node/-/node-7.19.0.tgz",

|

||||

@@ -13200,20 +13213,6 @@

|

||||

}

|

||||

}

|

||||

},

|

||||

"@sentry/types": {

|

||||

"version": "7.17.4",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/types/-/types-7.17.4.tgz",

|

||||

"integrity": "sha512-QJj8vO4AtxuzQfJIzDnECSmoxwnS+WJsm1Ta2Cwdy+TUCBJyWpW7aIJJGta76zb9gNPGb3UcAbeEjhMJBJeRMQ=="

|

||||

},

|

||||

"@sentry/utils": {

|

||||

"version": "7.17.4",

|

||||

"resolved": "https://registry.npmjs.org/@sentry/utils/-/utils-7.17.4.tgz",

|

||||

"integrity": "sha512-ioG0ANy8uiWzig82/e7cc+6C9UOxkyBzJDi1luoQVDH6P0/PvM8GzVU+1iUVUipf8+OL1Jh09GrWnd5wLm3XNQ==",

|

||||

"requires": {

|

||||

"@sentry/types": "7.17.4",

|

||||

"tslib": "^1.9.3"

|

||||

}

|

||||

},

|

||||

"@sinclair/typebox": {

|

||||

"version": "0.24.51",

|

||||

"resolved": "https://registry.npmjs.org/@sinclair/typebox/-/typebox-0.24.51.tgz",

|

||||

@@ -13433,12 +13432,6 @@

|

||||

"@types/node": "*"

|

||||

}

|

||||

},

|

||||

"@types/prettier": {

|

||||

"version": "2.7.1",

|

||||

"resolved": "https://registry.npmjs.org/@types/prettier/-/prettier-2.7.1.tgz",

|

||||

"integrity": "sha512-ri0UmynRRvZiiUJdiz38MmIblKK+oH30MztdBVR95dv/Ubw6neWSb8u1XpRb72L4qsZOhz+L+z9JD40SJmfWow==",

|

||||

"dev": true

|

||||

},

|

||||

"@types/qs": {

|

||||

"version": "6.9.7",

|

||||

"resolved": "https://registry.npmjs.org/@types/qs/-/qs-6.9.7.tgz",

|

||||

@@ -14219,9 +14212,9 @@

|

||||

}

|

||||

},

|

||||

"decode-uri-component": {

|

||||

"version": "0.2.0",

|

||||

"resolved": "https://registry.npmjs.org/decode-uri-component/-/decode-uri-component-0.2.0.tgz",

|

||||

"integrity": "sha512-hjf+xovcEn31w/EUYdTXQh/8smFL/dzYjohQGEIgjyNavaJfBY2p5F527Bo1VPATxv0VYTUC2bOcXvqFwk78Og=="

|

||||

"version": "0.2.2",

|

||||

"resolved": "https://registry.npmjs.org/decode-uri-component/-/decode-uri-component-0.2.2.tgz",

|

||||

"integrity": "sha512-FqUYQ+8o158GyGTrMFJms9qh3CqTKvAqgqsTnkLI8sKu0028orqBhxNMFkFen0zGyg6epACD32pjVk58ngIErQ=="

|

||||

},

|

||||

"dedent": {

|

||||

"version": "0.7.0",

|

||||

@@ -14429,22 +14422,6 @@

|

||||

}

|

||||

}

|

||||

},

|

||||

"eslint-config-prettier": {

|

||||

"version": "8.5.0",

|

||||

"resolved": "https://registry.npmjs.org/eslint-config-prettier/-/eslint-config-prettier-8.5.0.tgz",

|

||||

"integrity": "sha512-obmWKLUNCnhtQRKc+tmnYuQl0pFU1ibYJQ5BGhTVB08bHe9wC8qUeG7c08dj9XX+AuPj1YSGSQIHl1pnDHZR0Q==",

|

||||

"dev": true,

|

||||

"requires": {}

|

||||

},

|

||||

"eslint-plugin-prettier": {

|

||||

"version": "4.2.1",

|

||||

"resolved": "https://registry.npmjs.org/eslint-plugin-prettier/-/eslint-plugin-prettier-4.2.1.tgz",

|

||||

"integrity": "sha512-f/0rXLXUt0oFYs8ra4w49wYZBG5GKZpAYsJSm6rnYL5uVDjd+zowwMwVZHnAjf4edNrKpCDYfXDgmRE/Ak7QyQ==",

|

||||

"dev": true,

|

||||

"requires": {

|

||||

"prettier-linter-helpers": "^1.0.0"

|

||||

}

|

||||

},

|

||||

"eslint-scope": {

|

||||

"version": "5.1.1",

|

||||

"resolved": "https://registry.npmjs.org/eslint-scope/-/eslint-scope-5.1.1.tgz",

|

||||

@@ -14667,12 +14644,6 @@

|

||||

"integrity": "sha512-f3qQ9oQy9j2AhBe/H9VC91wLmKBCCU/gDOnKNAYG5hswO7BLKj09Hc5HYNz9cGI++xlpDCIgDaitVs03ATR84Q==",

|

||||

"dev": true

|

||||

},

|

||||

"fast-diff": {

|

||||

"version": "1.2.0",

|

||||

"resolved": "https://registry.npmjs.org/fast-diff/-/fast-diff-1.2.0.tgz",

|

||||

"integrity": "sha512-xJuoT5+L99XlZ8twedaRf6Ax2TgQVxvgZOYoPKqZufmJib0tL2tegPBOZb1pVNgIhlqDlA0eO0c3wBvQcmzx4w==",

|

||||

"dev": true

|

||||

},

|

||||

"fast-glob": {

|

||||

"version": "3.2.12",

|

||||

"resolved": "https://registry.npmjs.org/fast-glob/-/fast-glob-3.2.12.tgz",

|

||||

@@ -15034,12 +15005,6 @@

|

||||

"integrity": "sha512-B4FFZ6q/T2jhhksgkbEW3HBvWIfDW85snkQgawt07S7J5QXTk6BkNV+0yAeZrM5QpMAdYlocGoljn0sJ/WQkFw==",

|

||||

"dev": true

|

||||

},

|

||||

"husky": {

|

||||

"version": "8.0.1",

|

||||

"resolved": "https://registry.npmjs.org/husky/-/husky-8.0.1.tgz",

|

||||

"integrity": "sha512-xs7/chUH/CKdOCs7Zy0Aev9e/dKOMZf3K1Az1nar3tzlv0jfqnYtu235bstsWTmXOR0EfINrPa97yy4Lz6RiKw==",

|

||||

"dev": true

|

||||

},

|

||||

"iconv-lite": {

|

||||

"version": "0.4.24",

|

||||

"resolved": "https://registry.npmjs.org/iconv-lite/-/iconv-lite-0.4.24.tgz",

|

||||

@@ -15603,7 +15568,6 @@

|

||||

"@jest/transform": "^29.3.1",

|

||||

"@jest/types": "^29.3.1",

|

||||

"@types/babel__traverse": "^7.0.6",

|

||||

"@types/prettier": "^2.1.5",

|

||||

"babel-preset-current-node-syntax": "^1.0.0",

|

||||

"chalk": "^4.0.0",

|

||||

"expect": "^29.3.1",

|

||||

@@ -18246,21 +18210,6 @@

|

||||

"integrity": "sha512-vkcDPrRZo1QZLbn5RLGPpg/WmIQ65qoWWhcGKf/b5eplkkarX0m9z8ppCat4mlOqUsWpyNuYgO3VRyrYHSzX5g==",

|

||||

"dev": true

|

||||

},

|

||||

"prettier": {

|

||||

"version": "2.7.1",

|

||||

"resolved": "https://registry.npmjs.org/prettier/-/prettier-2.7.1.tgz",

|

||||

"integrity": "sha512-ujppO+MkdPqoVINuDFDRLClm7D78qbDt0/NR+wp5FqEZOoTNAjPHWj17QRhu7geIHJfcNhRk1XVQmF8Bp3ye+g==",

|

||||

"dev": true

|

||||

},

|

||||

"prettier-linter-helpers": {

|

||||

"version": "1.0.0",

|

||||

"resolved": "https://registry.npmjs.org/prettier-linter-helpers/-/prettier-linter-helpers-1.0.0.tgz",

|

||||

"integrity": "sha512-GbK2cP9nraSSUF9N2XwUwqfzlAFlMNYYl+ShE/V+H8a9uNl/oUqB1w2EL54Jh0OlyRSd8RfWYJ3coVS4TROP2w==",

|

||||

"dev": true,

|

||||

"requires": {

|

||||

"fast-diff": "^1.1.2"

|

||||

}

|

||||

},

|

||||

"pretty-format": {

|

||||

"version": "29.3.1",

|

||||

"resolved": "https://registry.npmjs.org/pretty-format/-/pretty-format-29.3.1.tgz",

|

||||

@@ -18324,11 +18273,11 @@

|

||||

}

|

||||

},

|

||||

"query-string": {

|

||||

"version": "7.1.1",

|

||||

"resolved": "https://registry.npmjs.org/query-string/-/query-string-7.1.1.tgz",

|

||||

"integrity": "sha512-MplouLRDHBZSG9z7fpuAAcI7aAYjDLhtsiVZsevsfaHWDS2IDdORKbSd1kWUA+V4zyva/HZoSfpwnYMMQDhb0w==",

|

||||

"version": "7.1.3",

|

||||

"resolved": "https://registry.npmjs.org/query-string/-/query-string-7.1.3.tgz",

|

||||

"integrity": "sha512-hh2WYhq4fi8+b+/2Kg9CEge4fDPvHS534aOOvOZeQ3+Vf2mCFsaFBYj0i+iXcAq6I9Vzp5fjMFBlONvayDC1qg==",

|

||||

"requires": {

|

||||

"decode-uri-component": "^0.2.0",

|

||||

"decode-uri-component": "^0.2.2",

|

||||

"filter-obj": "^1.1.0",

|

||||

"split-on-first": "^1.0.0",

|

||||

"strict-uri-encode": "^2.0.0"

|

||||

@@ -18710,6 +18659,11 @@

|

||||

"resolved": "https://registry.npmjs.org/statuses/-/statuses-2.0.1.tgz",

|

||||

"integrity": "sha512-RwNA9Z/7PrK06rYLIzFMlaF+l73iwpzsqRIFgbMLbTcLD6cOao82TaWefPXQvB2fOC4AjuYSEndS7N/mTCbkdQ=="

|

||||

},

|

||||

"stoppable": {

|

||||

"version": "1.1.0",

|

||||

"resolved": "https://registry.npmjs.org/stoppable/-/stoppable-1.1.0.tgz",

|

||||

"integrity": "sha512-KXDYZ9dszj6bzvnEMRYvxgeTHU74QBFL54XKtP3nyMuJ81CFYtABZ3bAzL2EdFUaEwJOBOgENyFj3R7oTzDyyw=="

|

||||

},

|

||||

"strict-uri-encode": {

|

||||

"version": "2.0.0",

|

||||

"resolved": "https://registry.npmjs.org/strict-uri-encode/-/strict-uri-encode-2.0.0.tgz",

|

||||

|

||||

@@ -1,5 +1,6 @@

|

||||

{

|

||||

"dependencies": {

|

||||

"@godaddy/terminus": "^4.11.2",

|

||||

"@sentry/node": "^7.14.0",

|

||||

"@sentry/tracing": "^7.19.0",

|

||||

"@types/crypto-js": "^4.1.1",

|

||||

@@ -19,7 +20,7 @@

|

||||

"mongoose": "^6.7.2",

|

||||

"nodemailer": "^6.8.0",

|

||||

"posthog-node": "^2.1.0",

|

||||

"query-string": "^7.1.1",

|

||||

"query-string": "^7.1.3",

|

||||

"rimraf": "^3.0.2",

|

||||

"stripe": "^10.7.0",

|

||||

"tweetnacl": "^1.0.3",

|

||||

@@ -30,12 +31,13 @@

|

||||

"version": "1.0.0",

|

||||

"main": "src/index.js",

|

||||

"scripts": {

|

||||

"prepare": "cd .. && npm install",

|

||||

"start": "npm run build && node build/index.js",

|

||||

"dev": "nodemon",

|

||||

"build": "rimraf ./build && tsc && cp -R ./src/templates ./src/json ./build",

|

||||

"build": "rimraf ./build && tsc && cp -R ./src/templates ./build",

|

||||

"lint": "eslint . --ext .ts",

|

||||

"lint-and-fix": "eslint . --ext .ts --fix",

|

||||

"prettier-format": "prettier --config .prettierrc 'src/**/*.ts' --write"

|

||||

"lint-staged": "lint-staged"

|

||||

},

|

||||

"repository": {

|

||||

"type": "git",

|

||||

@@ -61,14 +63,10 @@

|

||||

"@typescript-eslint/eslint-plugin": "^5.40.1",

|

||||

"@typescript-eslint/parser": "^5.40.1",

|

||||

"eslint": "^8.26.0",

|

||||

"eslint-config-prettier": "^8.5.0",

|

||||

"eslint-plugin-prettier": "^4.2.1",

|

||||

"husky": "^8.0.1",

|

||||

"install": "^0.13.0",

|

||||

"jest": "^29.3.1",

|

||||

"nodemon": "^2.0.19",

|

||||

"npm": "^8.19.3",

|

||||

"prettier": "^2.7.1",

|

||||

"ts-node": "^10.9.1"

|

||||

}

|

||||

}

|

||||

|

||||

@@ -10,15 +10,23 @@ const JWT_SIGNUP_LIFETIME = process.env.JWT_SIGNUP_LIFETIME! || '15m';

|

||||

const JWT_SIGNUP_SECRET = process.env.JWT_SIGNUP_SECRET!;

|

||||

const MONGO_URL = process.env.MONGO_URL!;

|

||||

const NODE_ENV = process.env.NODE_ENV! || 'production';

|

||||

const OAUTH_CLIENT_SECRET_HEROKU = process.env.OAUTH_CLIENT_SECRET_HEROKU!;

|

||||

const OAUTH_TOKEN_URL_HEROKU = process.env.OAUTH_TOKEN_URL_HEROKU!;

|

||||

const CLIENT_SECRET_HEROKU = process.env.CLIENT_SECRET_HEROKU!;

|

||||

const CLIENT_ID_HEROKU = process.env.CLIENT_ID_HEROKU!;

|

||||

const CLIENT_ID_VERCEL = process.env.CLIENT_ID_VERCEL!;

|

||||

const CLIENT_ID_NETLIFY = process.env.CLIENT_ID_NETLIFY!;

|

||||

const CLIENT_SECRET_VERCEL = process.env.CLIENT_SECRET_VERCEL!;

|

||||

const CLIENT_SECRET_NETLIFY = process.env.CLIENT_SECRET_NETLIFY!;

|

||||

const CLIENT_SLUG_VERCEL= process.env.CLIENT_SLUG_VERCEL!;

|

||||

const POSTHOG_HOST = process.env.POSTHOG_HOST! || 'https://app.posthog.com';

|

||||

const POSTHOG_PROJECT_API_KEY = process.env.POSTHOG_PROJECT_API_KEY! || 'phc_nSin8j5q2zdhpFDI1ETmFNUIuTG4DwKVyIigrY10XiE';

|

||||

const POSTHOG_PROJECT_API_KEY =

|

||||

process.env.POSTHOG_PROJECT_API_KEY! ||

|

||||

'phc_nSin8j5q2zdhpFDI1ETmFNUIuTG4DwKVyIigrY10XiE';

|

||||

const PRIVATE_KEY = process.env.PRIVATE_KEY!;

|

||||

const PUBLIC_KEY = process.env.PUBLIC_KEY!;

|

||||

const SENTRY_DSN = process.env.SENTRY_DSN!;

|

||||

const SITE_URL = process.env.SITE_URL!;

|

||||

const SMTP_HOST = process.env.SMTP_HOST! || 'smtp.gmail.com';

|

||||

const SMTP_PORT = process.env.SMTP_PORT! || 587;

|

||||

const SMTP_NAME = process.env.SMTP_NAME!;

|

||||

const SMTP_USERNAME = process.env.SMTP_USERNAME!;

|

||||

const SMTP_PASSWORD = process.env.SMTP_PASSWORD!;

|

||||

@@ -28,38 +36,44 @@ const STRIPE_PRODUCT_STARTER = process.env.STRIPE_PRODUCT_STARTER!;

|

||||

const STRIPE_PUBLISHABLE_KEY = process.env.STRIPE_PUBLISHABLE_KEY!;

|

||||

const STRIPE_SECRET_KEY = process.env.STRIPE_SECRET_KEY!;

|

||||

const STRIPE_WEBHOOK_SECRET = process.env.STRIPE_WEBHOOK_SECRET!;

|

||||

const TELEMETRY_ENABLED = (process.env.TELEMETRY_ENABLED! !== 'false') && true;

|

||||

const TELEMETRY_ENABLED = process.env.TELEMETRY_ENABLED! !== 'false' && true;

|

||||

|

||||

export {

|

||||

PORT,

|

||||

EMAIL_TOKEN_LIFETIME,

|

||||

ENCRYPTION_KEY,

|

||||

JWT_AUTH_LIFETIME,

|

||||

JWT_AUTH_SECRET,

|

||||

JWT_REFRESH_LIFETIME,

|

||||

JWT_REFRESH_SECRET,

|

||||

JWT_SERVICE_SECRET,

|

||||

JWT_SIGNUP_LIFETIME,

|

||||

JWT_SIGNUP_SECRET,

|

||||

MONGO_URL,

|

||||

NODE_ENV,

|

||||

OAUTH_CLIENT_SECRET_HEROKU,

|

||||

OAUTH_TOKEN_URL_HEROKU,

|

||||

POSTHOG_HOST,

|

||||

POSTHOG_PROJECT_API_KEY,

|

||||

PRIVATE_KEY,

|

||||

PUBLIC_KEY,

|

||||

SENTRY_DSN,

|

||||

SITE_URL,

|

||||

SMTP_HOST,

|

||||

SMTP_NAME,

|

||||

SMTP_USERNAME,

|

||||

SMTP_PASSWORD,

|

||||

STRIPE_PRODUCT_CARD_AUTH,

|

||||

STRIPE_PRODUCT_PRO,

|

||||

STRIPE_PRODUCT_STARTER,

|

||||

STRIPE_PUBLISHABLE_KEY,

|

||||

STRIPE_SECRET_KEY,

|

||||

STRIPE_WEBHOOK_SECRET,

|

||||

TELEMETRY_ENABLED

|

||||

PORT,

|

||||

EMAIL_TOKEN_LIFETIME,

|

||||

ENCRYPTION_KEY,

|

||||

JWT_AUTH_LIFETIME,

|

||||

JWT_AUTH_SECRET,

|

||||

JWT_REFRESH_LIFETIME,

|

||||

JWT_REFRESH_SECRET,

|

||||

JWT_SERVICE_SECRET,

|

||||

JWT_SIGNUP_LIFETIME,

|

||||

JWT_SIGNUP_SECRET,

|

||||

MONGO_URL,

|

||||

NODE_ENV,

|

||||

CLIENT_ID_HEROKU,

|

||||

CLIENT_ID_VERCEL,

|

||||

CLIENT_ID_NETLIFY,

|

||||

CLIENT_SECRET_HEROKU,

|

||||

CLIENT_SECRET_VERCEL,

|

||||

CLIENT_SECRET_NETLIFY,

|

||||

CLIENT_SLUG_VERCEL,

|

||||

POSTHOG_HOST,

|

||||

POSTHOG_PROJECT_API_KEY,

|

||||

PRIVATE_KEY,

|

||||

PUBLIC_KEY,

|

||||

SENTRY_DSN,

|

||||

SITE_URL,

|

||||

SMTP_HOST,

|

||||

SMTP_PORT,

|

||||

SMTP_NAME,

|

||||

SMTP_USERNAME,

|

||||

SMTP_PASSWORD,

|

||||

STRIPE_PRODUCT_CARD_AUTH,

|

||||

STRIPE_PRODUCT_PRO,

|

||||

STRIPE_PRODUCT_STARTER,

|

||||

STRIPE_PUBLISHABLE_KEY,

|

||||

STRIPE_SECRET_KEY,

|

||||

STRIPE_WEBHOOK_SECRET,

|

||||

TELEMETRY_ENABLED

|

||||

};

|

||||

|

||||

@@ -1,3 +1,4 @@

|

||||

/* eslint-disable @typescript-eslint/no-var-requires */

|

||||

import { Request, Response } from 'express';

|

||||

import jwt from 'jsonwebtoken';

|

||||

import * as Sentry from '@sentry/node';

|

||||

@@ -5,17 +6,17 @@ import * as bigintConversion from 'bigint-conversion';

|

||||

const jsrp = require('jsrp');

|

||||

import { User } from '../models';

|

||||

import { createToken, issueTokens, clearTokens } from '../helpers/auth';

|

||||

import {

|

||||

NODE_ENV,

|

||||

JWT_AUTH_LIFETIME,

|

||||

JWT_AUTH_SECRET,

|

||||

JWT_REFRESH_SECRET

|

||||

import {

|

||||

NODE_ENV,

|

||||

JWT_AUTH_LIFETIME,

|

||||

JWT_AUTH_SECRET,

|

||||

JWT_REFRESH_SECRET

|

||||

} from '../config';

|

||||

|

||||

declare module 'jsonwebtoken' {

|

||||

export interface UserIDJwtPayload extends jwt.JwtPayload {

|

||||

userId: string;

|

||||

}

|

||||

export interface UserIDJwtPayload extends jwt.JwtPayload {

|

||||

userId: string;

|

||||

}

|

||||

}

|

||||

|

||||

const clientPublicKeys: any = {};

|

||||

@@ -27,47 +28,45 @@ const clientPublicKeys: any = {};

|

||||

* @returns

|

||||

*/

|

||||

export const login1 = async (req: Request, res: Response) => {

|

||||

try {

|

||||

const {

|

||||

email,

|

||||

clientPublicKey

|

||||

}: { email: string; clientPublicKey: string } = req.body;

|

||||

|

||||

const user = await User.findOne({

|

||||

email

|

||||

}).select('+salt +verifier');

|

||||

|

||||

try {

|

||||

const {

|

||||

email,

|

||||

clientPublicKey

|

||||

}: { email: string; clientPublicKey: string } = req.body;

|

||||

|

||||

if (!user) throw new Error('Failed to find user');

|

||||

const user = await User.findOne({

|

||||

email

|

||||

}).select('+salt +verifier');

|

||||